The open-source library scikit-learn is an ML mainstay: 29% of all Python developers use it, according to the 2021 Python Developers Survey, making it the fifth-most-used data science framework or library out of the 14 the survey asked about.

On Monday, October 17th, the scikit-learn team announced some big news: The “Pandas DataFrame output is now available for all sklearn transformers.” They called this “one of the biggest improvements in scikit-learn in a long time.”

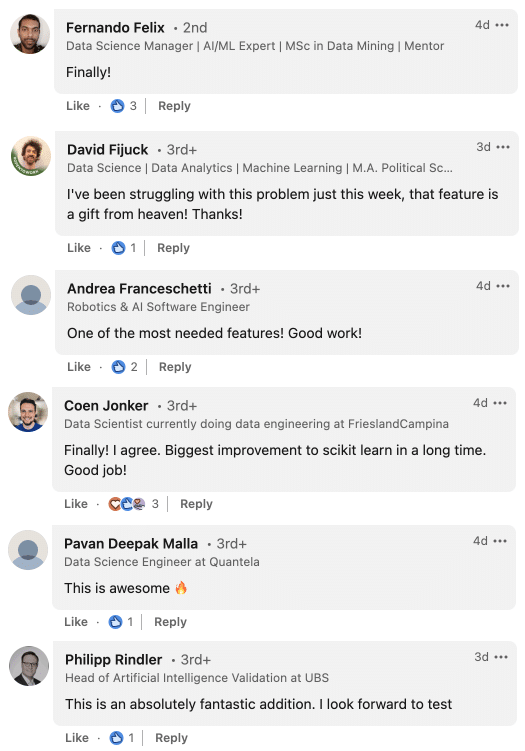

Data scientists greeted the announcement on social media with a lot of enthusiasm.

Our goal in this article is to understand the implications of this improvement. To do this, we’ll tackle three questions:

- What are transformers, and what about sklearn’s transformers implementation has changed?

- How does enabling Pandas dataframe outputs change the user experience?

- What does this change suggest about the trajectory of Pandas use in ML workflows?


Part 1: What are transformers, and what about sklearn’s transformers implementation has changed?

First of all, some group therapy. If you hear the word “transformers” and get anxious, there might be a good reason: The word has multiple meanings in data science contexts, and keeping track of what kind of transformer someone is talking about can get confusing.

If someone talks about transformers and then goes on to mention GPT-3, BERT, Hugging Face, etc., they’re likely talking about the group of deep learning models (often natural language processing models) that have taken the data science community by storm since they were first ushered in by Google Brain in 2017. This is what you learn about when you read the Wikipedia article on ML Transformers.

But this is not what sklearn’s announcement is about! It’s about transformers that have been around a lot longer than that: search the sklearn GitHub repo for the word “transformer,” and you’ll see lots of pre-2017 mentions of the term, including one as early as 2011.

The kind of transformer we’re talking about here is a reusable data transformation for tasks like pre-processing data and feature engineering: scaling variables so that they have a mean of zero and a standard deviation of one, filling in missing values, and other essential steps in an ML workflow. In sklearn, a transformer is an object with a fit method, which learns whatever it needs from the data (a column’s mean, for example), and a transform method, which applies that learned transformation to data; fit_transform does both in one call.
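To make the fit/transform pattern concrete, here is a minimal sketch using sklearn’s SimpleImputer to fill in missing values. The toy arrays are made up for illustration. The imputer learns each column’s mean in fit, then reuses those learned means when it transforms data it has never seen.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Toy data, made up for illustration; np.nan marks a missing value
train = np.array([[1.0, 10.0],
                  [3.0, np.nan],
                  [5.0, 30.0]])
new_data = np.array([[np.nan, np.nan]])

imputer = SimpleImputer(strategy="mean")
imputer.fit(train)                    # learns per-column means (3.0 and 20.0)
filled = imputer.transform(new_data)  # fills the gaps with the learned means

print(imputer.statistics_)
print(filled)
```

Because the learned statistics live on the fitted object, the same transformation can be applied again and again, which is exactly what makes these transformers reusable across training and test data.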

Until now, if you used an sklearn transformer, you’d get a NumPy array back (we’ll see in a moment why this can be hard to work with). But with this change, released in scikit-learn 1.2, sklearn transformers can return a Pandas dataframe instead!

Part 2: How does enabling Pandas dataframe outputs change the user experience?

To understand why it’s often easier to work with a Pandas dataframe than with a NumPy array, we’ll first look at what working with sklearn transformers was like for a Pandas user before this change. (All code in this post is adapted from here.)

First, we load in the famed Iris dataset. Even before this update, sklearn accepted Pandas dataframes as input to its transformers, and load_iris could already return its data as a dataframe. So we can pass as_frame=True and examine the input data with .head().

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# as_frame=True returns the features as a DataFrame and the target as a Series
X, y = load_iris(as_frame=True, return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)
X_train.head()
```

Here are the first five rows of the dataframe:

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |
|---|---|---|---|---|
| 60 | 5.0 | 2.0 | 3.5 | 1.0 |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 |
| 8 | 4.4 | 2.9 | 1.4 | 0.2 |
| 93 | 5.0 | 2.3 | 3.3 | 1.0 |
| 106 | 4.9 | 2.5 | 4.5 | 1.7 |

There’s nothing new about the above process. But until now, applying a transformation only gave us back a NumPy array, as follows:

```python
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
scaler.fit(X_train)  # learn each column's mean and standard deviation
X_test_scaled = scaler.transform(X_test)  # returns a plain NumPy array
X_test_scaled[0:5]
```

```
array([[-0.89426443,  0.7983005 , -1.27141116, -1.32760471],
       [-1.24446588, -0.08694362, -1.32740725, -1.45907396],
       [-0.66079679,  1.46223359, -1.27141116, -1.32760471],
       [-0.89426443,  0.57698947, -1.15941899, -0.93319694],
       [-0.42732916, -1.4148098 , -0.03949724, -0.27585067]])
```

In the example above, we’re applying the StandardScaler transformer to standardize our data: each column is rescaled using the mean and standard deviation learned from the training set.
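To see what “standardize” means numerically, here is a small sketch that recomputes the scaler’s output by hand. It assumes StandardScaler’s default settings, under which it subtracts each training column’s mean and divides by that column’s population standard deviation (NumPy’s default ddof=0), i.e. z = (x - mean) / std. Note that the test rows are scaled with training statistics, so their columns won’t have exactly zero mean.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)  # plain NumPy arrays this time
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=0)

scaler = StandardScaler().fit(X_train)

# Recompute the transform by hand from the training-set statistics
mu = X_train.mean(axis=0)
sigma = X_train.std(axis=0)  # ddof=0: population standard deviation
manual = (X_test - mu) / sigma

print(np.allclose(scaler.transform(X_test), manual))
print(np.allclose(scaler.mean_, mu), np.allclose(scaler.scale_, sigma))
```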

While NumPy outputs like the one above are useful in many situations, working with a bare array can be frustrating and error-prone because it carries no metadata, such as column names. You have to keep track of the data and the column labels separately and remember that the first column corresponds to “sepal length”, the second to “sepal width”, and so on. In a previous job as a data scientist, I remember sorting a NumPy array by feature importance and then spending time making sure I’d sorted the feature labels in exactly the same way. You either end up zipping things together or poking around manually, and it’s easy to make mistakes where you index on the wrong columns.
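Here is a rough sketch of that bookkeeping problem. The importance scores are hypothetical numbers made up for illustration. With bare arrays, the safe pattern is to compute a single permutation with np.argsort and apply it to both the scores and the labels; with a Pandas Series, the labels simply travel with the values.

```python
import numpy as np
import pandas as pd

feature_names = np.array(["sepal length (cm)", "sepal width (cm)",
                          "petal length (cm)", "petal width (cm)"])
# Hypothetical importance scores, in the same order as feature_names
importances = np.array([0.10, 0.02, 0.45, 0.43])

# NumPy: one permutation (descending), applied to BOTH arrays
order = np.argsort(importances)[::-1]
sorted_names = feature_names[order]
sorted_importances = importances[order]
for name, score in zip(sorted_names, sorted_importances):
    print(f"{name}: {score:.2f}")

# Pandas: the index keeps labels attached, so there is nothing to keep in sync
ranked = pd.Series(importances, index=feature_names).sort_values(ascending=False)
print(ranked)
```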

And if you’re used to working with Pandas dataframes, you’d have to take extra steps to convert the array back into a dataframe before you could keep working with it in Pandas. These workarounds might not have been too painful, but they weren’t as frictionless as native support.

Now, with the recent sklearn improvement, all you have to do is call your transformer’s set_output method with transform="pandas", and the transform method returns a Pandas dataframe:

```python
from sklearn.preprocessing import StandardScaler

# Ask this transformer to return a Pandas dataframe from transform()
scaler = StandardScaler().set_output(transform="pandas")
scaler.fit(X_train)
X_test_scaled = scaler.transform(X_test)
X_test_scaled.head()
```

And here’s the new output, complete with column names. Look at you, living your best Pandas life!

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) |
|---|---|---|---|---|
| 39 | -0.894264 | 0.798301 | -1.271411 | -1.327605 |
| 12 | -1.244466 | -0.086944 | -1.327407 | -1.459074 |
| 48 | -0.660797 | 1.462234 | -1.271411 | -1.327605 |
| 23 | -0.894264 | 0.576989 | -1.159419 | -0.933197 |
| 81 | -0.427329 | -1.414810 | -0.039497 | -0.275851 |

If you’re all-in on Pandas, instead of calling set_output on every transformer, you can set a global configuration option once, sklearn.set_config(transform_output="pandas"), and all transformers will output Pandas dataframes.

Overall, the problem this new feature solves might not sound like much of a pain point, but if you’ve ever lived in an apartment with no washing machine and then moved to a place with a washing machine in-unit, you know how taxing a seemingly minor daily friction can be. And for evidence that this was a real problem for Pandas users (beyond the exclamations of joy we cited at the beginning), just look at the sklearn-pandas library on GitHub, which had about 2,700 stars at the time of writing and was built in part to solve inconveniences like this.

Part 3: What does this change suggest about the trajectory of Pandas use in ML workflows?

As mentioned above, many Python implementations of ML algorithms have long taken in a NumPy array and returned a NumPy array, since these arrays are well suited to linear algebra operations (they’re lightweight and typically numerical).

But most modern datasets aren’t simply pre-cleaned, pre-transformed numeric matrices (and this is not to slight NumPy! In fact, Pandas is built on NumPy!). How often do you download a pristine CSV file of numbers with no column names? So this change by scikit-learn reflects a growing acceptance among ML toolmakers that people want the flexibility Pandas provides, and that they’re excited when ML tools fit into their existing workflows instead of having to bend their workflows to fit the tools.

This isn’t the first time something like this has happened: TensorFlow Datasets has an as_dataframe function, and sklearn lets you load its bundled datasets as Pandas dataframes with as_frame=True, as we saw above. But it’s one important milestone toward a Pandas-native future.

Conclusion

Sklearn now allows transformers to output Pandas dataframes, a win for all data scientists and developers who use Pandas. And more generally, this serves as a sign that when the Pandas community needs other tools to better interoperate, toolmakers take that seriously.

Here at Ponder, we care about the Pandas API and Python because we care about making data scientists’ workflows simpler, more scalable, and more reliable. For more Pandas / Python / data science content, follow us on Twitter and LinkedIn. We’d love to hear your thoughts on which Python libraries still need better Pandas support!

Note: Photo by Iqram-O-dowla Shawon on Unsplash